I spent two years writing a book about acceleration. As a futurist, I thought it was time to put my thoughts on paper, but here's the problem with being a futurist: it's impossible to write about the future. By the time the book is published, the future is already the past. So instead of focusing on what's coming, I decided to focus on the patterns that lead to possible futures, the patterns of acceleration themselves. I planned for 280 pages and ended up with 800. Somewhere around page 400, I understood why: I was documenting a world that has lost the ability to see itself.

We live inside acceleration patterns so complex, so interwoven, that we've stopped trying to understand them as patterns at all. Instead, we've done what happens when complexity exceeds processing capacity: we've narrowed our vision to what's directly in front of us. The quarterly report. The election cycle. The next funding round. We've become insects navigating by local gradients, oblivious to the global topology.

The people who run our world measure everything. Tech companies track user engagement in microseconds. Markets move billions on sentiment that shifts by the hour. These aren't just different clocks running at different speeds; they're different species of time, incommensurable and increasingly hostile to translation. And we pretend this is fine. We pretend that expertise means burrowing deeper into your silo while the silos themselves are collapsing into each other.

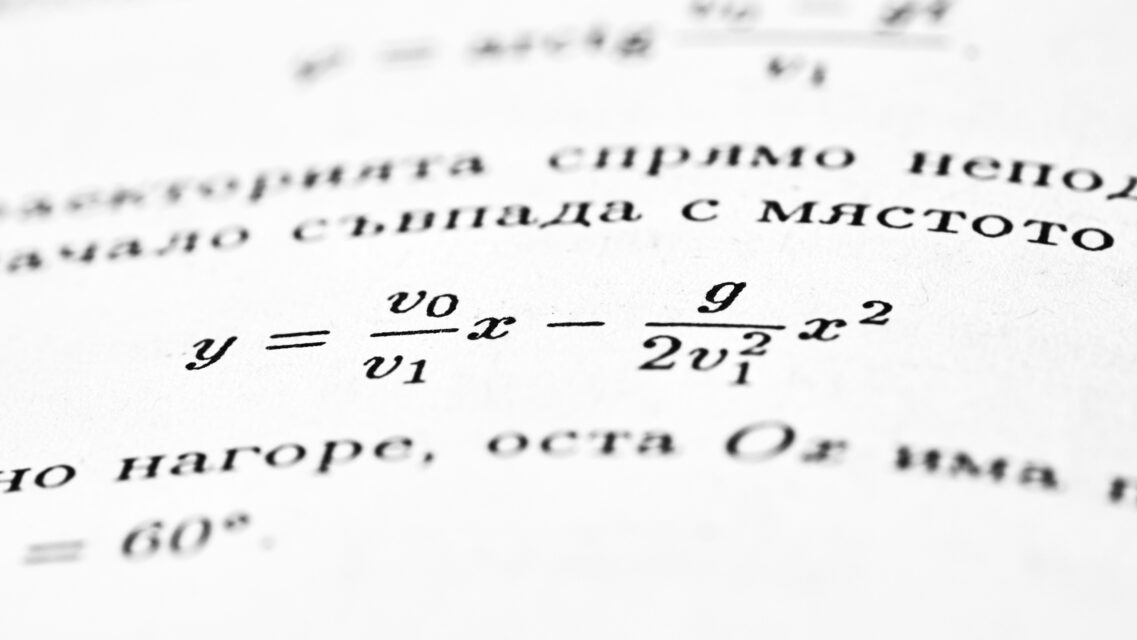

The paradox is this: we have more data than ever before, more computational power, more sophisticated models. Climate scientists can model atmospheric carbon in parts per million. Policy analysts can track sentiment shifts in real time across millions of data points. Financial systems can execute trades in microseconds based on algorithmic predictions. Yet this wealth of measurement has not translated into comprehension. We're tracking symptoms with extraordinary precision while the underlying patterns, the ones that actually determine outcomes, remain invisible to us. This isn't an information problem. It's a perception problem. We're looking at the world through instruments calibrated for a reality that no longer exists.

When I tell people we need a unified framework for understanding acceleration, they inevitably ask for the playbook. They want the model that worked somewhere else, the success story they can copy, the five steps to pattern recognition. This is the exact pathology I'm describing. Success stories are corpses. They're what's left after the pattern has finished with them. You cannot copy them because by the time you're looking at them, the conditions that created them have already moved on. Studying Google's rise tells you nothing about what to build now except what not to build. The pattern that produced Google is dead. The world it operated in is gone.

What you can study (what you must study if you want to do anything other than thrash) are preconditions. Not outcomes, but the topology of conditions that made the outcome possible. This is harder than it sounds because it requires something we've systematically trained ourselves not to do: observe from a distance we can't compute our way back from. You cannot see patterns while standing inside them. You cannot recognize the shape of the system from a position within the system. You need intellectual distance, and intellectual distance makes you useless for quarterly optimization.

This creates a cruel bind. The organizations that most need to understand acceleration patterns are the ones least capable of developing that understanding. They're optimized for execution within known parameters, not for the kind of systemic observation that reveals when the parameters themselves are shifting. They reward people who can improve metrics by 3% this quarter, not people who notice that the metrics are measuring the wrong things entirely. And so we continue, refining our ability to navigate a map while the territory transforms beneath our feet.

Here's an example that caught me off guard. Last August, Bulgaria hosted the world's first International Olympiad in Artificial Intelligence. Nearly 200 students from over 40 countries gathered in Burgas, a Black Sea city most Americans couldn't find with a map and a bet, to compete in both scientific and practical AI challenges. Bulgaria has done this before. It hosted the first International Olympiad in Informatics in 1989, back when most of the world thought computers were for payroll. But the pattern isn't that Bulgaria likes hosting olympiads.

The pattern is this: Bulgaria is a country of fewer than seven million people that has built a thriving tech ecosystem, with over €1 billion invested in startups between 2019 and 2023, by positioning itself at the intersection of acceleration vectors rather than at the center of any single one. It's in the EU but costs a fraction of Munich or Paris. It produces a significant number of technical graduates each year, with a Soviet-era emphasis on mathematics that never quite died. It's small enough that you can see the whole system at once, yet connected enough that every major pattern passes through. This is what intellectual distance looks like when it's geographic. From the crossroads, you see roads. From the capital, you see only yourself.

While American think tanks were writing papers about AI ethics and Chinese labs were optimizing for benchmark supremacy, Bulgaria was training teenagers to build with AI while simultaneously thinking about its implications. The organizers brought in Maria Ilieva, a pop star, to make the olympiad culturally resonant. They structured the competition around both scientific rigor and creative application. They understood that the pattern isn't technological acceleration; it's the convergence of capability, education, ethics, and culture happening simultaneously across registers. Miss one thread and you're not seeing the pattern. You're seeing your field's projection of the pattern, which is another way of being blind.

This matters because we are making catastrophically important decisions while pattern-blind. When AI transforms labor markets, that's not a technology story: it's a cascade through education policy, social cohesion, geopolitical power, and basic questions about human meaning. When climate shifts, that's not an environmental story: it's agriculture, migration, energy infrastructure, and war. These accelerations don't happen in parallel. They happen to each other. They amplify and interfere and create emergent patterns that none of our expertise is designed to recognize.

We're trying to understand a game whose rules change based on how we play it, while playing twelve other games simultaneously, each affecting the others' outcomes. And we're doing this with leadership trained to optimize within known, bounded systems. We're doing this with institutional structures designed for a world where domains were separable, where cause and effect were linear, where expertise meant deep knowledge of a stable field. That world is gone.

I don't mean this as criticism of individuals. The problem is structural. We've built an entire civilization on short time horizons. Quarterly earnings drive decisions with decade-long consequences. Election cycles force politicians to optimize for polls rather than for outcomes they won't live to see judged. Universities reward specialization so deep that adjacent fields become mutually unintelligible. Venture capital optimizes for exits, not for building institutions that can survive the disruptions they're funding. The machinery we've built to run the world is fundamentally mismatched to the world we're running.

Think about what this means in practice. A CEO with a fiduciary duty to maximize quarterly returns cannot make decisions optimized for decade-long pattern recognition. A politician facing reelection cannot advocate for policies whose benefits won't materialize for fifteen years. A researcher whose tenure depends on publication counts in narrowly defined journals cannot step back far enough to see how their field intersects with others. These aren't failures of character. They're structural impossibilities. We've created a system where the rational choice at every level produces collective blindness at the system level.

Cross-pollination, the bastard child of multiple accelerations colliding, is rewriting everything. AI development depends on semiconductor supply chains, which depend on geopolitical stability, which depends on economic models that AI is actively dissolving. It's a recursive loop eating its own tail, and we're trying to understand it with tools designed for linear causality. Every expert speaks a dialect incomprehensible to experts three fields away. The distance between molecular biology and macroeconomics is now smaller in reality than it is in our institutional architecture. The university has departments. The world has none.

Consider the implications. A breakthrough in quantum computing affects encryption, which affects financial systems, which affects geopolitical power, which affects research funding, which affects what quantum computing research gets done. The loop closes. And this is just one thread among thousands, all interacting, all accelerating. We need people who can hold this complexity without collapsing it into false simplifications. We need institutions that can operate at multiple timescales simultaneously. We need frameworks that can map the territory of acceleration without pretending it's simpler than it is.

What would a unified framework look like? After two years, I can tell you what it cannot be: a simplification. We don't need to reduce complexity. We need to develop a shared language for pattern recognition that works across domains without flattening them. We need to map preconditions, not outcomes. We need tools for thinking at multiple timescales simultaneously-holding the next quarter and the next quarter-century in mind and understanding them as parts of one system.

This is not a technical problem. It's a cognitive one. It requires admitting that the intellectual distance needed to see patterns makes you worse at quarterly optimization. You cannot do both. A person scanning for emergent systemic patterns is not also maximizing this quarter's metrics. An institution devoted to understanding acceleration is not also optimizing its immediate outputs. We need people and institutions with the mandate to be useless in the short term. We need to create space for thinking that doesn't immediately convert to action, for observation that doesn't immediately optimize a metric, for understanding that doesn't immediately produce a deliverable.

This will feel like waste to systems optimized for efficiency. It will feel like luxury to organizations fighting for survival. But it's neither. It's the minimum price of navigation in a world where the terrain is changing faster than maps can be redrawn. The alternative isn't efficiency. It's blindness with better metrics.

The organizations and countries that develop this capacity won't be the ones at the center. Centers are where you go to be blind. Bulgaria's example is instructive precisely because it's peripheral. The periphery sees clearly because it has no choice. When you're at the crossroads rather than the capital, you watch the traffic because your survival depends on it. The advantage isn't resources. It's not being important enough to afford delusion.

This creates an opening. Small countries, peripheral institutions, and organizations without the weight of legacy success all have structural advantages in an age of acceleration. They can pivot faster. They can experiment with less to lose. They can see patterns precisely because they haven't optimized themselves into blindness. The next wave of genuine innovation won't come from the centers doubling down on what made them central. It will come from the edges, where people are still paying attention because they have to.

What Bulgaria teaches us isn't replicable as policy, but it's legible as principle. You cannot copy its playbook; the conditions that made it work are already shifting. But you can study the preconditions: geographic positioning at intersections rather than centers, institutional humility that comes from never having been dominant enough to stop paying attention, and the willingness to integrate seemingly separate threads (technical education, cultural resonance, ethical consideration) into a single coherent vision. Bulgaria succeeded not by trying to compete with Silicon Valley or Shenzhen on their terms, but by recognizing that the periphery offers something centers cannot buy: the necessity of pattern recognition. When your survival depends on watching the traffic, you learn to see roads the capitals have forgotten exist. The lesson isn't "be like Bulgaria." It's simpler and harder: position yourself where you're forced to see clearly, then build for the patterns you observe rather than the outcomes you envy. Small countries aren't the only ones who can do this, but they're often the only ones who must.

I'm not optimistic. The incentives are wrong. The timescales are wrong. The metrics are catastrophically wrong. Whether we develop a unified framework or not, the patterns will continue. They don't need our permission or comprehension. The question is whether we'll be making decisions with any sense of what we're doing, or whether we'll keep stumbling through a dark room rearranging furniture we think we recognize by touch, never realizing the room itself is shrinking.

How many connections we've failed to make. How many preconditions we've misunderstood because we were studying outcomes. The book's back cover says, "This book is not for you. Don't buy it." I meant it as a warning: this isn't a quick read or a simple answer. But perhaps what I should have said is simpler. If you're not willing to step back far enough to see the patterns, no amount of data will save you. Measurement is not comprehension. Metrics are not understanding. And right now, we're measuring everything while comprehending almost nothing.

The patterns are already here. They're interacting, amplifying, creating emergent structures we have no language for. We're just not watching them. Not with the distance required. Not with the humility to admit that what made you successful in 2015 might make you extinct in 2025. Every quarter we spend optimizing for metrics that miss the pattern is a quarter we lose in a game whose rules we're too close to read. And the distance between where we are and where we need to be to see clearly is growing faster than we're capable of moving.

Pattern recognition isn't optional anymore. It's the price of survival in a world where everything connects to everything else faster than expertise can adapt. We either learn to see the whole board, or we'll keep making brilliant local moves that add up to comprehensive, irreversible catastrophe. The choice is still ours. The patterns don't care either way.